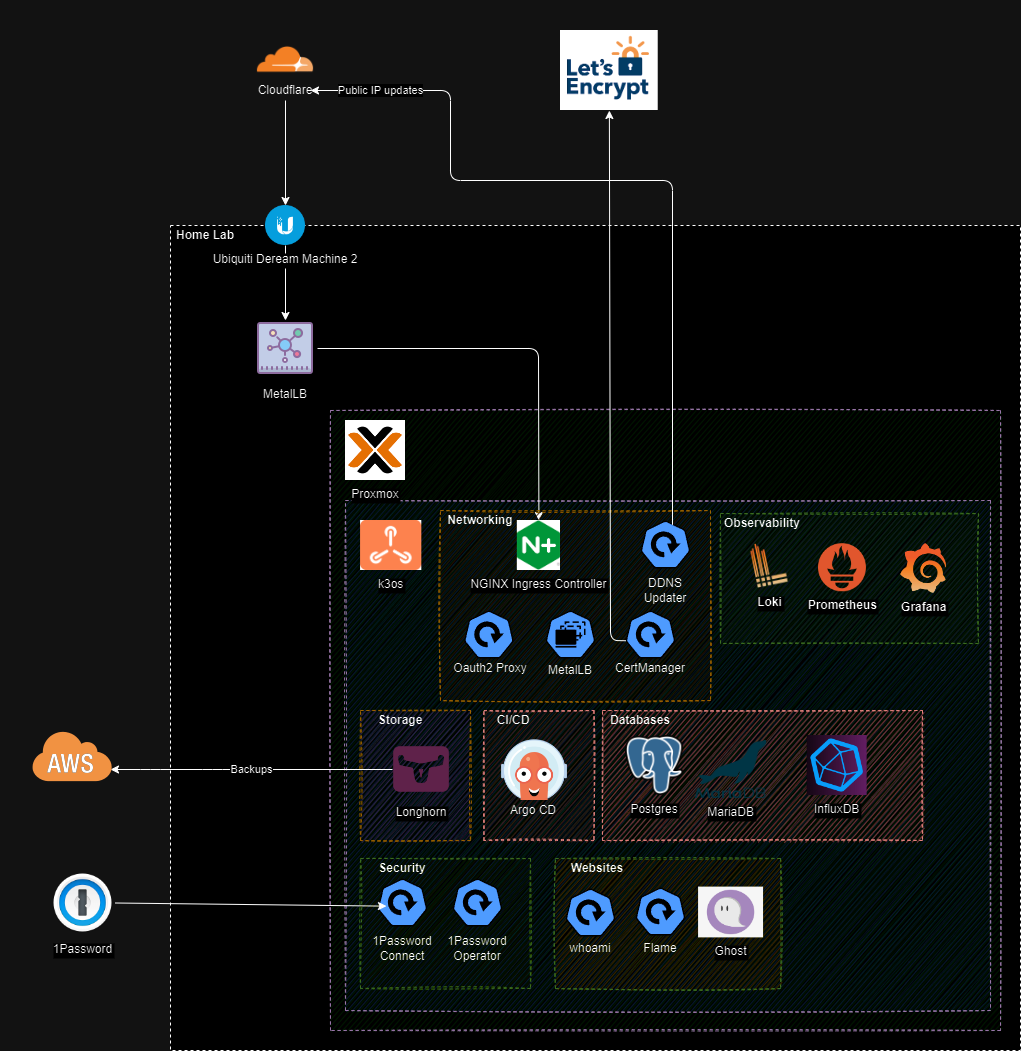

So it's that time of year again: the year's winding to an end, I've got some PTO days to burn, and my home lab looks something like this:

In the best spirit of end-of-year tidying, I spent some time reorganizing and rebuilding my home computing lab. Along the way I explored some new technology, learned some lessons, and achieved the late-stage career goal of any software engineer: to ~~start a blog~~ become an influencer\*.

\* = sarcasm very much intended.

Design goals

Medium is essentially free. A Ghost Pro hosting plan costs $9/month. Over the long run, either will almost certainly beat a self-hosted blog on uptime and updates. So what do I want to achieve by running my own?

- Bootstrap a Kubernetes cluster - K8s is a great platform with a thriving ecosystem (both professionally and for self-hosted software). You get basic platform features (ingresses with SSL certs, monitoring and observability, storage and databases) without a ton of work.

- Sandbox GitOps - I've been elbows-deep in bootstrapping a new CI/CD architecture at ASAPP. Running GitOps (via ArgoCD) at home gives me somewhere to explore and test new ideas, and it makes my home lab easier to maintain going forward.

- Minimize ongoing cost - I already own the hardware (mainly an Intel NUC bought before the pandemic chip shortage, plus a Ubiquiti Dream Machine Pro), so those costs are well and truly sunk. It'd be a shame not to use them! As a fun exercise, I also want to take advantage of free services (Let's Encrypt) and free tiers (Cloudflare) whenever possible.

What I've built

The cluster currently has 72(!) pods spread across 3 Kubernetes nodes.

A few notes on the different areas:

k3os

After initially exploring Talos (more on that in the future), I settled on k3os as an all-in-one Linux and Kubernetes distribution. Proxmox's Cloud-Init support (big shoutout to Dustin Rue's article) let me inject k3os configuration files into the VMs.

On the initial node, my configuration looked something like this:

```yaml
ssh_authorized_keys:
  - github:YOUR_GITHUB_USER
hostname: k8s-node-1
k3os:
  data_sources:
    - cdrom
  modules:
    - kvm
    - nfs
  dns_nameservers:
    - 8.8.8.8
    - 1.1.1.1
  ntp_servers:
    - 0.us.pool.ntp.org
    - 1.us.pool.ntp.org
  password: YOUR_PASSWORD
  k3s_args:
    - server
    - "--cluster-init"
    - "--disable"
    - "traefik"
    - "--disable"
    - "servicelb"
```

On the other nodes, it looks something like this:

```yaml
ssh_authorized_keys:
  - github:YOUR_GITHUB_USER
hostname: k8s-node-2
k3os:
  data_sources:
    - cdrom
  modules:
    - kvm
    - nfs
  dns_nameservers:
    - 8.8.8.8
    - 1.1.1.1
  ntp_servers:
    - 0.us.pool.ntp.org
    - 1.us.pool.ntp.org
  password: YOUR_PASSWORD
  server_url: https://YOUR_SERVER:6443
  token: "NODE_TOKEN"
  k3s_args:
    - server
    - "--disable"
    - "traefik"
    - "--disable"
    - "servicelb"
```

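Repeat this for each VM (the first configuration once, the second twice with a unique hostname each) to get a 3-node highly available Kubernetes cluster. `--cluster-init` tells the first server to start k3s's embedded etcd, and `NODE_TOKEN` is the join token k3s writes to `/var/lib/rancher/k3s/server/node-token` on that first node.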

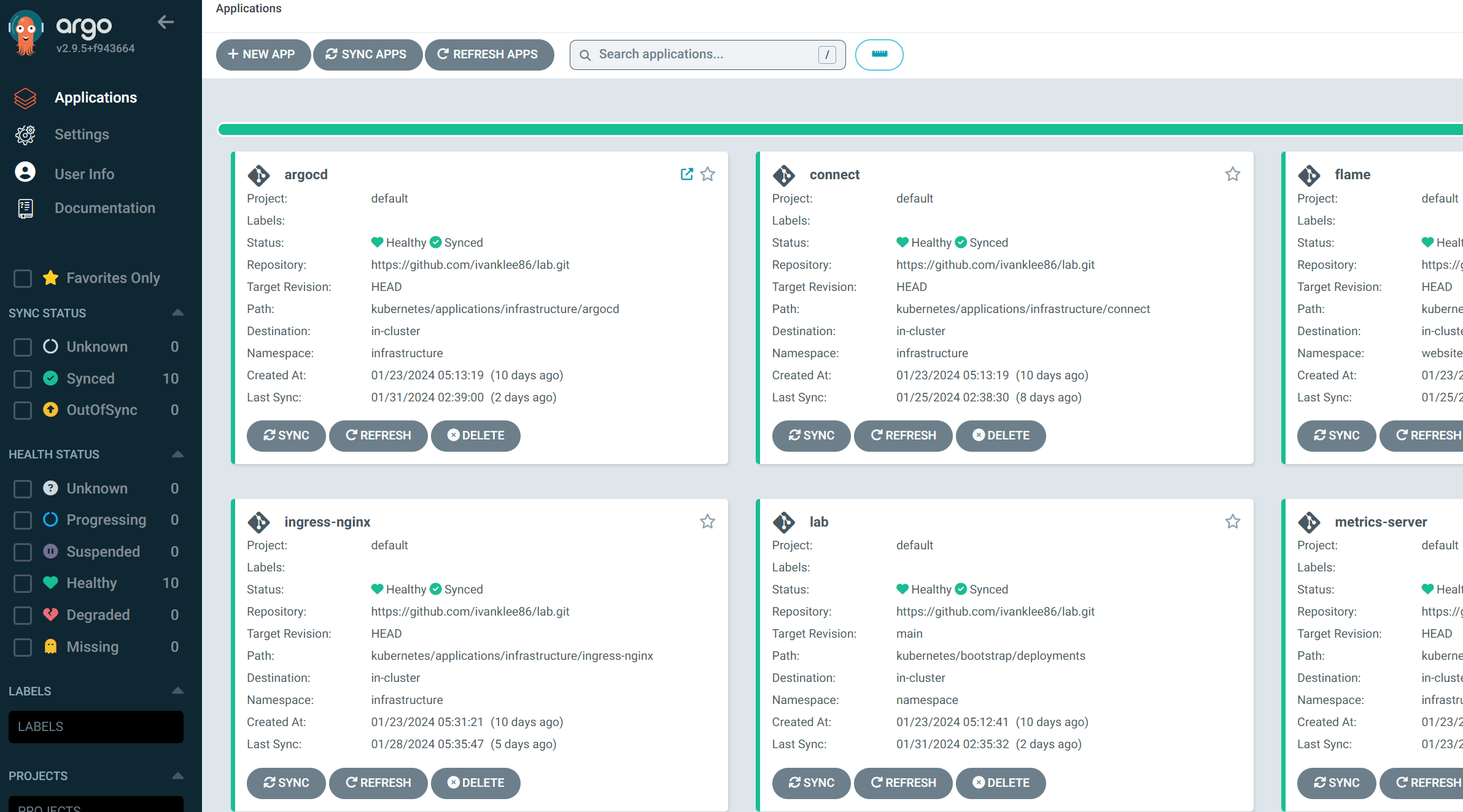

Continuous Deployment with ArgoCD

I'm using ArgoCD to GitOps-ify my Kubernetes cluster. The ArgoCD ApplicationSet controller keeps your Applications DRY: a generator plus a single template stamps out one Application per entry, so deploying a new manifest or chart is mostly a matter of adding an element to a list. The following example ApplicationSet deploys my monitoring stack and uses ArgoCD Notifications annotations to alert me of any failures.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: applicationset-monitoring
  labels:
    type: controller
spec:
  generators:
    - list:
        elements:
          - appName: "prometheus"
            namespace: "monitoring"
            path: "kubernetes/charts/prometheus"
          - appName: "grafana"
            namespace: "monitoring"
            path: "kubernetes/charts/grafana"
          - appName: "loki"
            namespace: "monitoring"
            path: "kubernetes/charts/loki"
          - appName: "promtail"
            namespace: "monitoring"
            path: "kubernetes/charts/promtail"
  template:
    metadata:
      name: "{{appName}}"
      namespace: argocd
      annotations:
        notifications.argoproj.io/subscribe.on-health-degraded.slack: argo
        notifications.argoproj.io/subscribe.on-sync-failed.slack: argo
        notifications.argoproj.io/subscribe.on-sync-status-unknown.slack: argo
      finalizers:
        - resources-finalizer.argocd.argoproj.io
    spec:
      project: default
      source:
        repoURL: https://github.com/ivanklee86/homelab
        targetRevision: HEAD
        path: "{{path}}"
      destination:
        server: https://kubernetes.default.svc
        namespace: "{{namespace}}"
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
```

I'm using another Application to manage the ApplicationSet (all neatly packaged as a Helm chart).
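If you haven't set up this "app of apps" arrangement before, here's a minimal sketch of what such a parent Application could look like. The Application name and the `kubernetes/applicationsets` path are hypothetical placeholders, not the actual layout of the homelab repo. The remaining fields mirror the template in the ApplicationSet above.

```yaml
# Hypothetical parent Application that syncs a Helm chart containing the
# ApplicationSets ("app of apps"). Name and path are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: applicationsets                # hypothetical name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/ivanklee86/homelab
    targetRevision: HEAD
    path: kubernetes/applicationsets   # hypothetical path to the Helm chart
  destination:
    server: https://kubernetes.default.svc
    namespace: argocd                  # deploy the ApplicationSets into ArgoCD's namespace
  syncPolicy:
    automated:
      prune: true                      # delete ApplicationSets removed from Git
      selfHeal: true                   # revert manual drift in the cluster
```

One gotcha when the ApplicationSets live inside a Helm chart: Helm will try to render placeholders like `{{appName}}` itself and fail. Escaping them (for example `{{ "{{appName}}" }}`) makes Helm emit the literal string so the ApplicationSet controller can fill it in.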

Networking

The trickiest (and by far longest) part was getting the networking right. I used the following "chain" of SaaS tools, hardware, and applications to set up a reverse proxy with Let's Encrypt SSL certs and OAuth.

- Cloudflare for DNS, WAF, and DDoS mitigation.

- A Ubiquiti Dream Machine Pro for firewall and security. (And a small adventure into small business networking!)

- MetalLB as a network load balancer. The firewall forwards ports 80 and 443 to the load balancer's IP. (Note: it's important to disable the load balancer that ships with k3s using `--disable servicelb`.)

- Ingress-Nginx as a reverse proxy.

- cert-manager to manage Let's Encrypt SSL certificates.

- OAuth2-Proxy to provide SSO (GitHub in my case) for applications that don't ship with their own auth.

- ddns-updater, a lightweight DDNS updater, to keep my DNS records pointed at my home's dynamic public IP.
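As a sketch of the MetalLB piece, here's what a layer-2 setup might look like. This assumes MetalLB v0.13 or newer; older releases were configured through a ConfigMap rather than these CRDs. The resource names and the `192.168.1.240-192.168.1.250` range are placeholders. Pick a range on your LAN that the router's DHCP server won't hand out.

```yaml
# Assumes MetalLB v0.13+ (CRD-based configuration).
# Pool of LAN addresses MetalLB may assign to LoadBalancer Services.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: homelab-pool                  # placeholder name
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.240-192.168.1.250     # placeholder range outside the DHCP scope
---
# Answer ARP requests for addresses from the pool (layer 2 mode).
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: homelab-l2                    # placeholder name
  namespace: metallb-system
spec:
  ipAddressPools:
    - homelab-pool
```

In a chain like this, the ingress-nginx controller's `LoadBalancer` Service would receive an address from the pool. That address is the natural target for the firewall's port 80/443 forwards.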

The end result is that it's relatively quick and easy to deploy and secure applications such as Flame (a personal dashboard).
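To illustrate how the pieces combine, here's a sketch of what exposing Flame could look like. cert-manager issues the certificate through a Let's Encrypt `ClusterIssuer`, and ingress-nginx's external-auth annotations route unauthenticated requests through OAuth2-Proxy. Several details are assumptions and are not taken from the author's repo:

- the hostnames (`flame.example.com`, `auth.example.com`)
- the email address and Secret names
- the Flame Service name and port (5005)
- the use of Cloudflare DNS-01 validation

```yaml
# Let's Encrypt issuer using Cloudflare DNS-01 validation (an assumption;
# HTTP-01 through ingress-nginx is another option).
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: you@example.com                  # placeholder
    privateKeySecretRef:
      name: letsencrypt-prod-account-key    # cert-manager stores the ACME account key here
    solvers:
      - dns01:
          cloudflare:
            apiTokenSecretRef:
              name: cloudflare-api-token    # placeholder Secret in cert-manager's namespace
              key: api-token
---
# Ingress for Flame: TLS from cert-manager, auth delegated to OAuth2-Proxy.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: flame
  namespace: flame                          # placeholder namespace
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    nginx.ingress.kubernetes.io/auth-url: "https://auth.example.com/oauth2/auth"
    nginx.ingress.kubernetes.io/auth-signin: "https://auth.example.com/oauth2/start?rd=$scheme://$host$escaped_request_uri"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - flame.example.com
      secretName: flame-tls                 # cert-manager creates and renews this Secret
  rules:
    - host: flame.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: flame                 # placeholder Service name
                port:
                  number: 5005              # Flame's default port (assumption)
```

With a shared auth host like this, OAuth2-Proxy also needs its `--cookie-domain` and `--whitelist-domain` set to the parent domain. Otherwise the login cookie and redirect won't carry over to each app's subdomain.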